Dynamic DAG Generation in Airflow: Simplifying with For Loops

Introduction

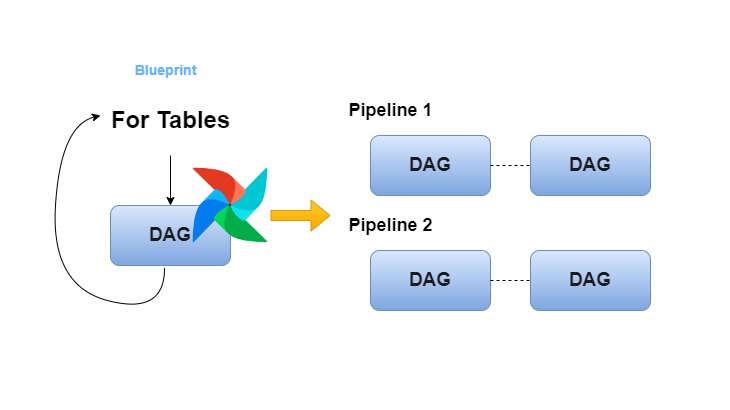

Managing multiple workflows in Apache Airflow can be a daunting task, especially when you’re working with a large number of similar data pipelines. Imagine a scenario where you need to create a separate DAG for each data source or table. Manually writing these DAGs would not only be repetitive but also error-prone. Fortunately, Airflow allows us to generate DAGs dynamically using Python, which is one of its most powerful features.

In this blog, we’ll explore how to leverage for loops to dynamically generate Airflow DAGs. We’ll discuss the benefits, best practices, and walk through an example that showcases how this technique can simplify workflow management.

Why Dynamic DAG Generation?

1. Scalability

Dynamic DAG generation allows you to handle a growing number of similar tasks without duplicating code. If you have dozens (or hundreds) of pipelines, you can create them programmatically, reducing maintenance overhead.

2. Consistency

When you dynamically generate DAGs, you ensure that they all follow the same structure and best practices. This consistency reduces errors and makes debugging easier.

3. Ease of Maintenance

Any change to the DAG logic (e.g., modifying task dependencies) can be applied universally by updating the base code, without the need to manually modify each DAG.

Dynamic DAG Generation Using For Loops

Let’s dive into how we can achieve this. We’ll start with a hypothetical use case: suppose we need to load data from multiple tables into a data warehouse, and each table requires its own DAG.

Step 1: Define a List of Tables

First, we define the list of tables or data sources for which we want to create DAGs.

tables = ['sales', 'customers', 'products', 'inventory']Step 2: Create a Function to Generate a DAG

Next, we define a function that will generate a DAG for a given table. This function will include all the necessary tasks and logic.

from airflow import DAG

from airflow.operators.dummy_operator import DummyOperator

from airflow.operators.python_operator import PythonOperator

from datetime import datetime, timedelta

def generate_dag(dag_id, schedule, default_args, table_name):

with DAG(dag_id, schedule_interval=schedule, default_args=default_args) as dag:

start = DummyOperator(task_id='start')

process_table = PythonOperator(

task_id=f'process_{table_name}',

python_callable=lambda: print(f"Processing table {table_name}")

)

end = DummyOperator(task_id='end')

# Setting task dependencies

start >> process_table >> end

return daStep 3: Use a For Loop to Dynamically Create DAGs

Now, we’ll use a for loop to create a DAG for each table in our list. We’ll register these DAGs to Airflow’s scheduler using the globals() method.

# Default arguments for all DAGs

default_args = {

'owner': 'airflow',

'depends_on_past': False,

'email_on_failure': False,

'email_on_retry': False,

'retries': 1,

'retry_delay': timedelta(minutes=5),

'start_date': datetime(2023, 1, 1),

}

# Schedule interval for all DAGs

schedule = '@daily'

# Loop through the tables and create a DAG for each

for table in tables:

dag_id = f'load_{table}_dag'

globals()[dag_id] = generate_dag(dag_id, schedule, default_args, table)How It Works:

generate_dagFunction: This function defines the structure of each DAG. It includes a start task, a processing task specific to the table, and an end task.

- For Loop: We iterate over the list of tables and dynamically generate a DAG for each one.

globals()Function: This function ensures that each generated DAG is registered with Airflow.

Discussion: Key Concepts and Best Practices

1. Modularity

Keeping the generate_dag function modular ensures that any changes to task logic can be made in one place. This reduces the likelihood of inconsistencies.

2. Avoid DAG Overload

While dynamic DAG generation is powerful, creating too many DAGs might overwhelm the Airflow scheduler. Group similar tables or tasks if possible to reduce the number of DAGs.

3. Unique DAG IDs

Ensure that each dynamically generated DAG has a unique ID. In our example, the dag_id is based on the table name, which guarantees uniqueness.

4. Testing

Always test your dynamic DAG generation logic before deploying it to production. Airflow provides a CLI command (airflow dags list) to verify that the DAGs are correctly registered.

5. Dynamic Task Creation

If your use case involves different tasks for each DAG, you can dynamically generate tasks within the generate_dag function as well. For example, if each table requires a specific set of data transformation tasks, you can define these tasks in a similar loop within the DAG function.

A Real-World Example: Processing Multiple Data Sources

Let’s extend our example to a more realistic scenario where each table requires three tasks:

- Extract data from a source

- Transform data

- Load data into a warehouse

Here’s how the generate_dag function could look:

def generate_dag(dag_id, schedule, default_args, table_name):

with DAG(dag_id, schedule_interval=schedule, default_args=default_args) as dag:

start = DummyOperator(task_id='start')

extract = PythonOperator(

task_id=f'extract_{table_name}',

python_callable=lambda: print(f"Extracting data from {table_name}")

)

transform = PythonOperator(

task_id=f'transform_{table_name}',

python_callable=lambda: print(f"Transforming data for {table_name}")

)

load = PythonOperator(

task_id=f'load_{table_name}',

python_callable=lambda: print(f"Loading data for {table_name} into warehouse")

)

end = DummyOperator(task_id='end')

# Setting task dependencies

start >> extract >> transform >> load >> end

return dagThe for loop remains the same, dynamically generating a DAG for each table and registering it with Airflow.

Conclusion

Dynamic DAG generation in Airflow is a game-changer for managing large-scale data workflows. By leveraging for loops and Python’s dynamic capabilities, you can automate the creation of DAGs, maintain consistency across pipelines, and significantly reduce manual effort.

This approach is particularly beneficial for organizations dealing with numerous data sources or tables, ensuring that workflows are scalable, maintainable, and robust.

Whether you're a data engineer or a data scientist, understanding dynamic DAG generation will make your workflow orchestration more efficient and adaptable to growing data needs. Try implementing it in your projects and experience the power of automation firsthand!